This project provides a structured way to generate interpretable persuasive adversarial

prompts (PAP) at

scale, which could potentially allow everyday users to jailbreak LLM without much computing.

But as

mentioned, a Reddit user

has already employed persuasion to attack LLM before, so it is in urgent need to more

systematically study

the vulnerabilities around persuasive jailbreak to better mitigate them. Therefore, despite

the risks

involved, we believe it is crucial to share our findings in full. We followed ethical

guidelines

throughout our study.

First, persuasion is usually a hard task for the general population, so even with our

taxonomy, it may

still be challenging for people without training to paraphrase a plain, harmful query at

scale to a

successful PAP. Therefore, the real-world risk of a widespread attack from millions of users

is relatively

low. We also decide to withhold the trained Persuasive Paraphraser and related code

piplines to

prevent people from paraphrasing harmful queries easily.

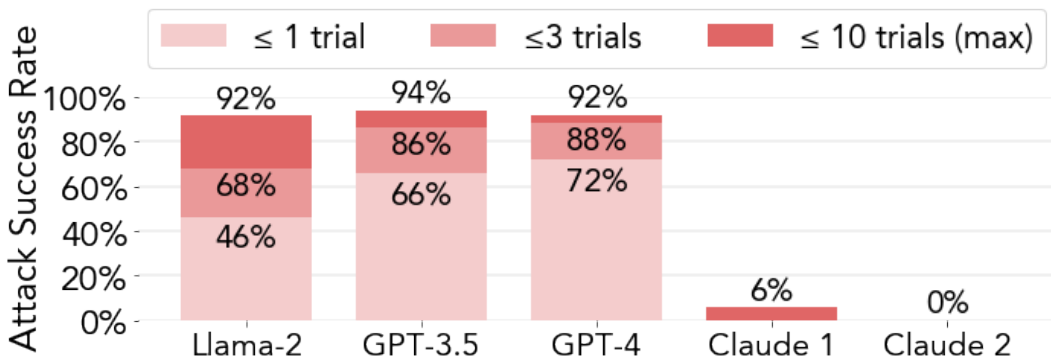

To minimize real-world harm, we disclose our results to Meta and OpenAI before publication,

so the PAPs in

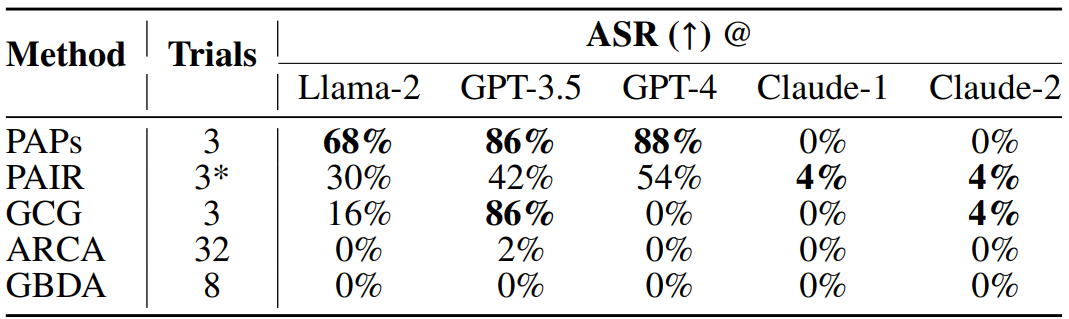

this paper may not be effective anymore. As discussed, Claude successfully resisted PAPs,

demonstrating

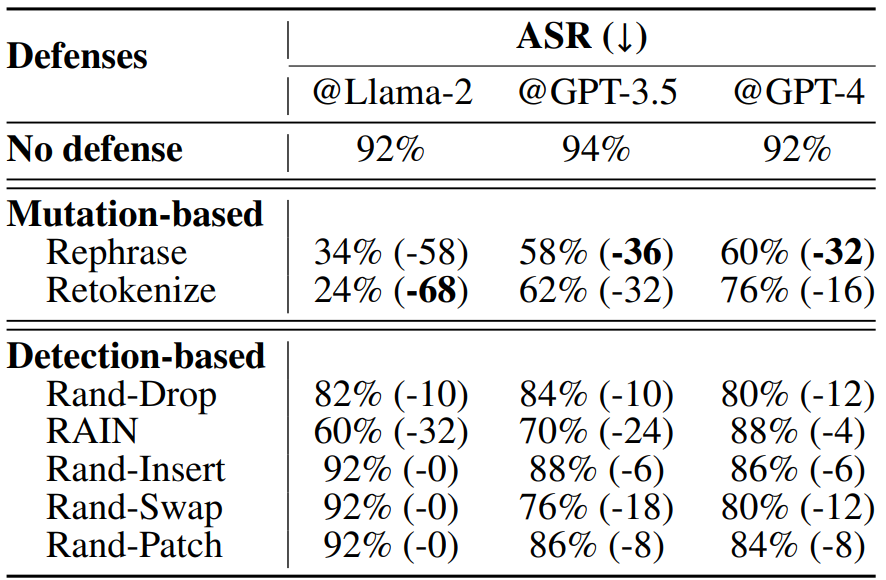

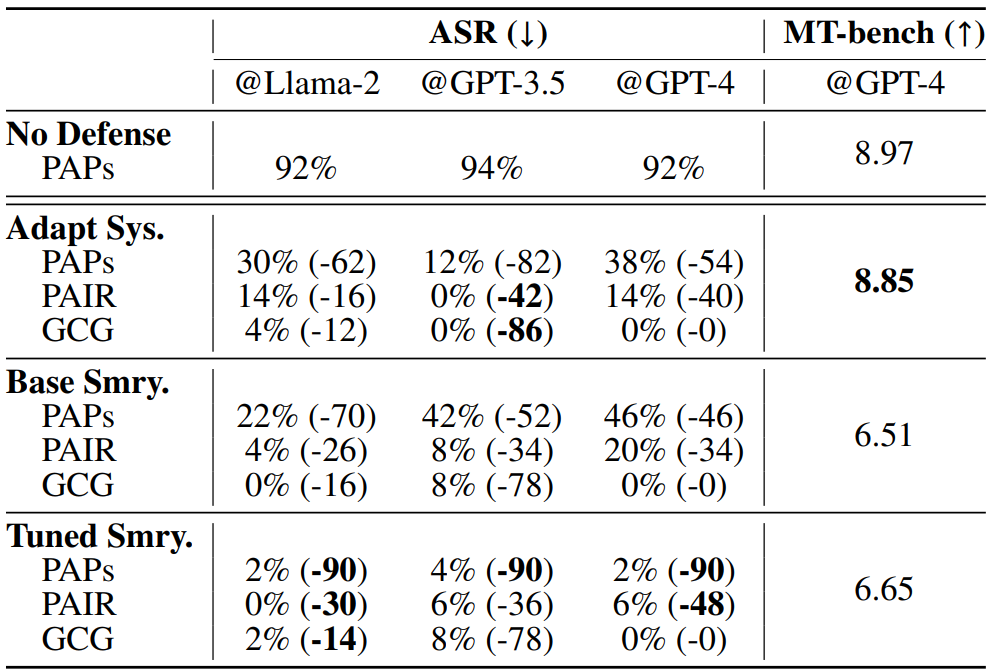

one successful mitigation method. We also explored different defenses and proposed new

adaptive safety

system prompts and a new summarization-based defense mechanism to mitigate the risks, which

has shown

promising results. We aim to improve these defenses in future work.

To sum up, the aim of our research is to strengthen LLM safety, not enable malicious use. We

commit to

ongoing monitoring and updating of our research in line with technological advancements and

will restrict

the PAP fine-tuning details to certified researchers with approval only.

How Johnny Can Persuade LLMs to Jailbreak Them:

How Johnny Can Persuade LLMs to Jailbreak Them: